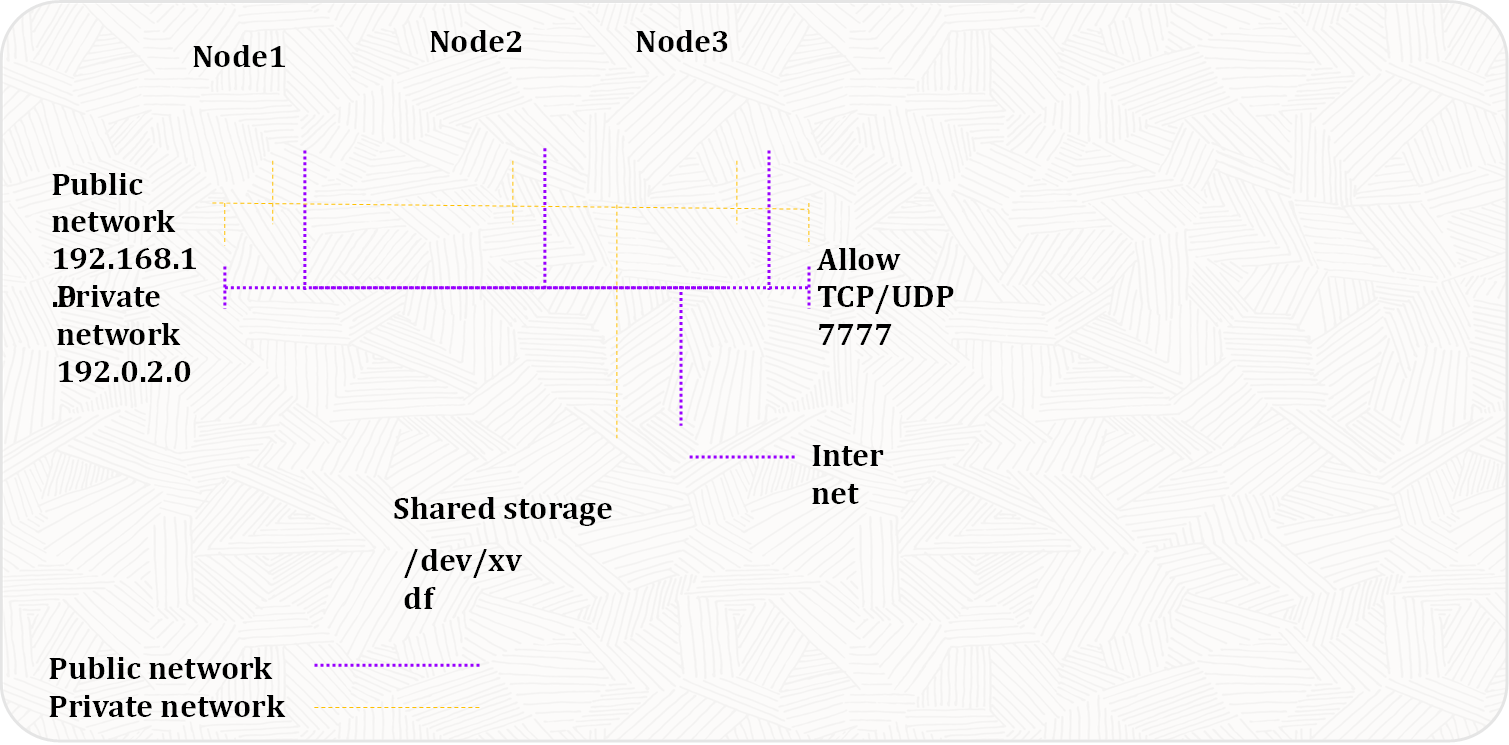

Let's walk through a sample configuration with shared storage for the cluster nodes, a private network between the nodes (192.168.1.0), and a requirement to allow TCP and UDP traffic on port 7777 over the private network.

Each node in the cluster requires access to shared storage. For cluster file systems, this shared storage is typically a shared SAN disk, an iSCSI device, or a shared virtual device on an NFS server.

In this example, each node (guest VM) shares /dev/xvdf, configured with the following disk entry in the vm.cfg file of each VM:

`'file:/vm/osimage/<vm_name>/gcol7u5sysadm3physdiskx1/image_files/gcol7u5sysadm3physdiskx1.img,xvdf,w'`

The entries in all vm.cfg files point to the same .img file with the same xvdf identifier.
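To make the "same image, same device" requirement concrete, here is a minimal Python sketch that splits a Xen-style `file:<path>,<device>,<mode>` disk entry and checks that every VM's entry resolves to the same triple. The helper names `parse_disk_entry` and `shares_same_disk` are hypothetical, and the sketch assumes each entry has exactly the three comma-separated fields shown above.

```python
# Hypothetical helpers: verify that every VM's shared-disk entry names the
# same backing image, the same guest device, and the same access mode.

def parse_disk_entry(entry: str) -> tuple[str, str, str]:
    """Split a 'file:<path>,<device>,<mode>' entry into (path, device, mode)."""
    # rsplit keeps any comma inside the path attached to the path itself.
    backend, device, mode = entry.rsplit(",", 2)
    if not backend.startswith("file:"):
        raise ValueError(f"not a file-backed disk entry: {entry!r}")
    return backend[len("file:"):], device, mode


def shares_same_disk(entries: list[str]) -> bool:
    """True if all entries resolve to a single (path, device, mode) triple."""
    return len({parse_disk_entry(e) for e in entries}) == 1


# The entry from the text, repeated once per VM (<vm_name> left as-is).
shared = ("file:/vm/osimage/<vm_name>/gcol7u5sysadm3physdiskx1/"
          "image_files/gcol7u5sysadm3physdiskx1.img,xvdf,w")
print(shares_same_disk([shared, shared, shared]))                    # True
print(shares_same_disk([shared, shared.replace(",xvdf,", ",xvdg,")]))  # False
```

A mismatch in any of the three fields (for example, one VM mapping the image to xvdg) makes the check fail, which is the situation the text warns against.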

OCFS2 requires the nodes to be alive on the network and sends regular keepalive packets (the heartbeat) to confirm that they are. A private interconnect is recommended so that a network delay is not mistaken for a node disappearing from the network, which could cause the node to fence itself. You can use OCFS2 without a private network, but such a configuration increases the probability of a node fencing itself out of the cluster due to an I/O heartbeat timeout.
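The reason a delay is dangerous can be shown with a toy model. This is not O2CB's actual implementation: it only assumes that a peer is presumed dead once no keepalive has arrived from it within some timeout. The function name and the 30-second timeout are hypothetical values chosen for illustration.

```python
# Toy model (not O2CB's implementation): a peer is presumed dead when its
# most recent keepalive is older than `timeout` seconds.

def peers_presumed_dead(last_seen: dict[str, float], now: float,
                        timeout: float) -> list[str]:
    """Return the peers whose last keepalive arrived more than `timeout` ago."""
    return sorted(name for name, t in last_seen.items() if now - t > timeout)


# host02 is still running, but its keepalives were stuck behind congestion
# on a busy shared network, so the last one that arrived is 35 s old.
last_seen = {"host01": 98.0, "host02": 65.0, "host03": 99.5}
print(peers_presumed_dead(last_seen, now=100.0, timeout=30.0))  # ['host02']
```

From its peers' point of view, a healthy but delayed host02 is indistinguishable from a crashed one, which is why a dedicated, uncongested interconnect lowers the chance of a spurious fence.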

In this sample setup, each node has one or more network interfaces on the public network (192.0.2.0) and one interface on the private network (192.168.1.0). For example:

- host01 uses eth1 with the private IP address 192.168.1.101

- host02 uses eth1 with the private IP address 192.168.1.102

- host03 uses eth2 with the private IP address 192.168.1.103
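The node list can be captured as a small table and sanity-checked with Python's standard `ipaddress` module. This sketch assumes the private network 192.168.1.0 uses a /24 prefix (the text gives only the network address), and `off_private_net` is a hypothetical helper. Note that the interface name differs on host03; the check looks only at the addresses.

```python
import ipaddress

# Sample node table from the text: node name -> (private interface, private IP).
NODES = {
    "host01": ("eth1", "192.168.1.101"),
    "host02": ("eth1", "192.168.1.102"),
    "host03": ("eth2", "192.168.1.103"),
}

# Assumption: the private network 192.168.1.0 has a /24 prefix.
PRIVATE_NET = ipaddress.ip_network("192.168.1.0/24")


def off_private_net(nodes, net=PRIVATE_NET):
    """Return the names of nodes whose private IP lies outside `net`."""
    return [name for name, (_iface, ip) in nodes.items()
            if ipaddress.ip_address(ip) not in net]


print(off_private_net(NODES))  # []
```

A node accidentally configured with a public address (for example, one in 192.0.2.0) would show up in the returned list.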

The O2CB cluster stack also requires firewalld to be either disabled or configured to allow network traffic on the private network interface. By default, the cluster uses both TCP and UDP on port 7777. This port number is specified in the cluster configuration file, /etc/ocfs2/cluster.conf. You can either allow traffic on this port through firewalld or disable firewalld.
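As a sketch of how the port ties the two pieces together, the Python below renders `cluster:` and `node:` stanzas in the tab-indented `key = value` layout of /etc/ocfs2/cluster.conf, and builds (but does not run) the `firewall-cmd` invocations that open 7777 for TCP and UDP. The cluster name `mycluster` is hypothetical, newer ocfs2-tools releases may add further keys such as `heartbeat_mode`, and in practice the file is usually maintained with the `o2cb` utility rather than written by hand. `--add-port` without `--zone` applies to firewalld's default zone; if the private interface sits in another zone, that zone would need to be named.

```python
CLUSTER = "mycluster"  # hypothetical cluster name; the text does not give one
O2CB_PORT = 7777       # default O2CB port (TCP and UDP), per the text

# Private addresses of the sample nodes, in node-number order.
NODE_ADDRESSES = [("host01", "192.168.1.101"),
                  ("host02", "192.168.1.102"),
                  ("host03", "192.168.1.103")]


def render_cluster_conf(cluster, nodes, port=O2CB_PORT):
    """Render a minimal cluster.conf: one cluster stanza plus one node stanza each."""
    lines = ["cluster:", f"\tnode_count = {len(nodes)}", f"\tname = {cluster}", ""]
    for number, (name, ip) in enumerate(nodes):
        lines += ["node:",
                  f"\tip_port = {port}",
                  f"\tip_address = {ip}",
                  f"\tnumber = {number}",
                  f"\tname = {name}",
                  f"\tcluster = {cluster}",
                  ""]
    return "\n".join(lines)


def firewall_cmds(port=O2CB_PORT):
    """Argument lists that permanently open `port` for TCP and UDP, then reload."""
    return [["firewall-cmd", "--permanent", f"--add-port={port}/tcp"],
            ["firewall-cmd", "--permanent", f"--add-port={port}/udp"],
            ["firewall-cmd", "--reload"]]


print(render_cluster_conf(CLUSTER, NODE_ADDRESSES))
for cmd in firewall_cmds():
    print(" ".join(cmd))
```

Keeping the port in a single constant means the value written to cluster.conf and the value opened in firewalld cannot drift apart.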

Recent Comments